MBI Videos

Thomas Murray


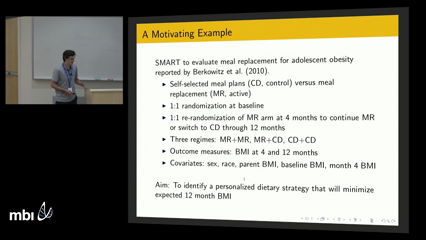

Medical therapy often consists of multiple stages, with a treatment chosen by the physician at each stage based on the patient's history of treatments and clinical outcomes. These decisions can be formalized as a dynamic treatment regime. This talk describes a new approach for optimizing dynamic treatment regimes that bridges the gap between Bayesian inference and Q-learning. The proposed approach fits a series of Bayesian regression models, one for each stage, in reverse sequential order. Each model uses as its response variable the remaining payoff, assuming optimal actions are taken at all subsequent stages, and as covariates the current history and the relevant actions at that stage. The key difficulty is that the optimal decision rules at subsequent stages are unknown, and even if these rules were known, the payoff under the subsequent optimal action(s) may be counterfactual. However, the previously fitted regression models yield posterior distributions both for the optimal decision rules and for the counterfactual payoffs under a particular set of rules. The proposed approach uses imputation to average over these posterior distributions when fitting each regression model. The talk presents an efficient sampling algorithm for estimation, called the backwards induction Gibbs (BIG) sampler, along with simulation study results comparing implementations of the proposed approach with Q-learning.
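The stage-wise fitting with imputation can be illustrated with a deliberately simplified sketch. It assumes two stages, binary actions, one scalar covariate per stage, conjugate normal linear models with a known residual SD (`SIGMA`) and prior SD (`TAU`), and simulated data; every name here (`backward_induction_sampler`, `simulate`, `features`, and so on) is hypothetical, and the abstract does not specify which regression models are used. This is not the BIG sampler itself: each iteration simply draws stage-2 coefficients, imputes the payoff under the stage-2 action that this draw deems optimal (keeping the observed payoff when the observed action already matches it, otherwise drawing from the posterior predictive), and then draws stage-1 coefficients given that imputed response.

```python
import random

# Hypothetical setup: actions a1, a2 in {0, 1}, scalar covariates x1, x2, final payoff y.
SIGMA = 1.0  # assumed known residual SD for both stage models
TAU = 10.0   # assumed prior SD for every regression coefficient

def features(x, a):
    # Linear model with an action main effect and an action-by-covariate interaction.
    return [1.0, x, float(a), a * x]

def cholesky(A):
    # Lower-triangular L with A = L L^T (A symmetric positive definite).
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = s ** 0.5 if i == j else s / L[j][j]
    return L

def solve_lower(L, b):
    # Forward substitution for L x = b.
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def solve_upper_t(L, b):
    # Back substitution for L^T x = b, using the lower-triangular factor L.
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def sample_posterior(X, y, rng):
    # Conjugate normal regression, known noise variance:
    # precision P = X'X / sigma^2 + I / tau^2, mean m = P^{-1} X'y / sigma^2.
    p = len(X[0])
    P = [[sum(r[i] * r[j] for r in X) / SIGMA**2 + (1.0 / TAU**2 if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    rhs = [sum(r[i] * yi for r, yi in zip(X, y)) / SIGMA**2 for i in range(p)]
    L = cholesky(P)
    mean = solve_upper_t(L, solve_lower(L, rhs))
    # If P = L L^T and z ~ N(0, I), then L^{-T} z ~ N(0, P^{-1}).
    noise = solve_upper_t(L, [rng.gauss(0.0, 1.0) for _ in range(p)])
    return [m + e for m, e in zip(mean, noise)]

def predict(beta, x, a):
    return sum(b * f for b, f in zip(beta, features(x, a)))

def best_action(beta, x):
    return max((0, 1), key=lambda a: predict(beta, x, a))

def backward_induction_sampler(data, n_draws, rng):
    # data: list of (x1, a1, x2, a2, y) tuples.
    X2 = [features(x2, a2) for _, _, x2, a2, _ in data]
    X1 = [features(x1, a1) for x1, a1, _, _, _ in data]
    ys = [y for *_, y in data]
    draws = []
    for _ in range(n_draws):
        # Last stage first: draw coefficients given the observed payoffs.
        beta2 = sample_posterior(X2, ys, rng)
        y_tilde = []
        for _, _, x2, a2, y in data:
            a_opt = best_action(beta2, x2)
            if a_opt == a2:
                y_tilde.append(y)  # observed payoff is already under the optimal action
            else:
                # Counterfactual payoff: draw from the posterior predictive under a_opt.
                y_tilde.append(predict(beta2, x2, a_opt) + rng.gauss(0.0, SIGMA))
        # Earlier stage: draw coefficients given this imputed response.
        beta1 = sample_posterior(X1, y_tilde, rng)
        draws.append((beta1, beta2))
    return draws

def simulate(n, rng):
    data = []
    for _ in range(n):
        x1 = rng.gauss(0.0, 1.0)
        a1 = rng.randint(0, 1)
        x2 = x1 + 0.5 * a1 + rng.gauss(0.0, 0.5)
        a2 = rng.randint(0, 1)
        # True stage-2 coefficients [1, 1, 1, -2]: a2 = 1 is better iff x2 < 0.5.
        y = 1.0 + x2 + a2 * (1.0 - 2.0 * x2) + rng.gauss(0.0, SIGMA)
        data.append((x1, a1, x2, a2, y))
    return data

if __name__ == "__main__":
    rng = random.Random(0)
    draws = backward_induction_sampler(simulate(400, rng), n_draws=200, rng=rng)
    for stage, idx in (("stage-1", 0), ("stage-2", 1)):
        mean = [sum(d[idx][k] for d in draws) / len(draws) for k in range(4)]
        print(stage, "posterior mean:", [round(v, 2) for v in mean])
```

Because a fresh imputation is drawn for every posterior draw of the stage-2 coefficients, the stage-1 draws average over uncertainty in both the stage-2 decision rule and the counterfactual payoffs. A stage-1 rule could then be read off, for example, by recommending `a1 = 1` wherever the posterior mean of `predict(beta1, x1, 1) - predict(beta1, x1, 0)` is positive.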
